- What is A/B Testing in Email Marketing?

- How can you use A/B testing for Email marketing?

- How to do email A/B testing? Five major steps

- How to set up the A/B testing email campaign?

- How long to run email A/B test?

- What mistakes should not be made while A/B testing your emails?

- Eight email A/B testing best practices

- Summary about email campaign testing

You definitely know the importance of email campaigns in reaching and engaging your audience. But with so many options available for subject lines, content, and design, how do you know which version will perform best? A/B testing can help you answer this question.

Email A/B testing, also known as split testing, lets you compare two versions of an email to see which performs better. By testing different elements of your email campaigns, you can optimize for higher opens, clicks, and conversions.

In this article, we'll dive into the ins and outs of A/B testing in email marketing: a step-by-step guide to setting up your own tests, common mistakes to avoid, and best practices for maximizing the effectiveness of your campaigns.

Let’s start.

What is A/B Testing in Email Marketing?

Email A/B testing is a way to compare two versions of an email marketing campaign to determine which performs better. This is done by sending one version of the email to a small group of recipients and the other version to a different, similarly sized group.

By comparing the engagement rates of the two groups, you can determine which version of the email is more effective and use that information to improve your future campaigns.

How can you use A/B testing for Email marketing?

You can use A/B email testing to enhance the performance of your email marketing campaigns, leading to higher engagement and conversion rates and, ultimately, a higher return on investment.

Testing helps you compare new and old elements of an email and choose the more effective one. This way, you can try out different ideas and find the ones that work best for your subscribers.

How to do email A/B testing? Five major steps

Conducting an email marketing A/B test involves five steps:

- Step 1. Identify the element

- Step 2. Create A/B test emails

- Step 3. Set conditions for calculating the best option

- Step 4. Launch the email tests

- Step 5. Measure the engagement rates of the two groups

Before starting, choose an email marketing service. Tools you can use for email campaign testing include GetProspect, Woodpecker, and HubSpot.

Before conducting A/B testing, it is crucial to verify your contact list to ensure successful delivery and protect your domain's reputation. This will help you reach all intended recipients and improve your delivery rate.

Once your contact list is verified, you work only with valid addresses and avoid wasting time and resources on inactive or non-existent email accounts.

How to set up the A/B testing email campaign?

Now let's look at each step in more detail with email A/B testing ideas.

1. Identify the element for your email tests

This could be:

- the sender name,

- the subject line,

- the call-to-action.

It can also be any other aspect of the email that you think might influence its effectiveness.

About email tests: the sender name

The «from name» in an email is the name that appears to the recipient as the sender of the email.

It's essential to make sure that the «from name» clearly indicates that the email is from your company. While you may want to experiment with different «from names», it's important to avoid anything that seems spammy or too unusual, as it may be confusing or off-putting to the recipient.

About email tests: the subject line

The subject line of an email is a crucial factor in whether the recipient will read it. To increase the chances of your emails being opened, experiment with different subject line styles, lengths, tones, and placements.

How can you A/B test subject lines for your emails?

Here are a few examples of how to experiment with subject lines.

A/B testing email: Add personalization to the subject line

Adding the recipient's name or location to the subject line can make the email feel more relevant and customized.

Data from Campaign Monitor shows that personalized subject lines are 26% more likely to be opened than non-personalized subject lines. This suggests that A/B testing different subject lines, including personalized ones, can effectively improve email performance.

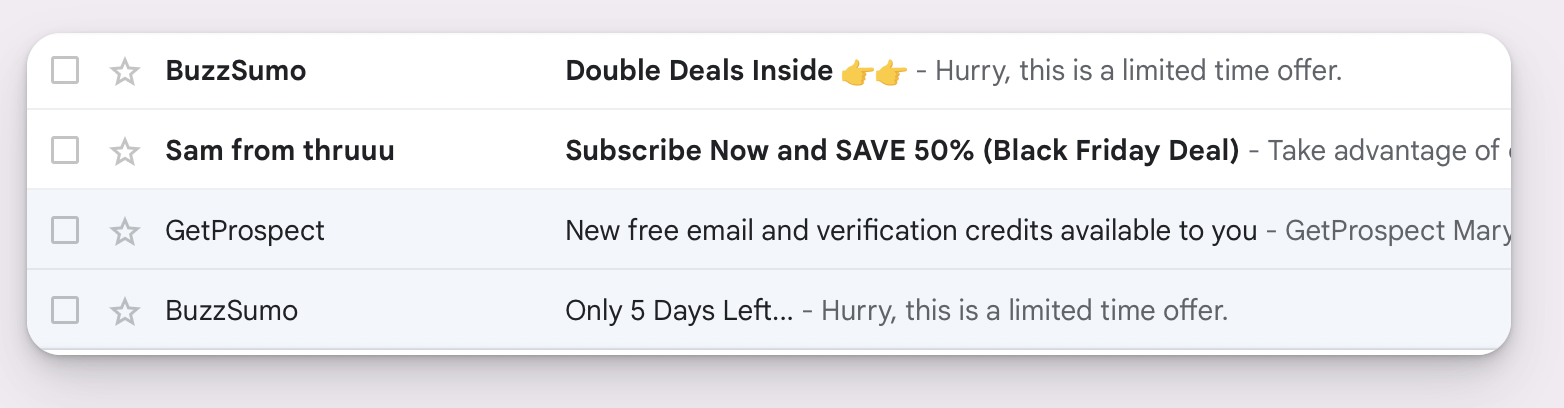

A/B testing email: Add urgency to the subject line

Creating a sense of urgency or scarcity in the subject line can encourage the recipient to open the email immediately.

For example, «Limited time offer: 50% off for the next 24 hours!»

A/B testing email: Add curiosity to the subject line

Piquing the recipient's curiosity with an intriguing or mysterious subject line can also be effective in getting them to open the email.

For instance, «You won't believe what we found in our latest survey.»

A/B testing email: Add emotional appeal to the subject line

Using emotional language or evoking strong feelings in the subject line can also be effective in getting the recipient to open the email.

For example, «Your support can make a difference in a child's life.»

A/B testing email: Add clarity to the subject line

Make sure the subject line accurately reflects the content of the email, so the recipient knows what to expect when they open it. This can increase the likelihood that they open it.

Remember. These are just a few examples, and there are other factors to consider when crafting an effective subject line. The key is to test different approaches and see what works best for your specific audience.

About email tests: the call-to-action

Calls to action (CTAs) are crucial elements of email marketing campaigns. They guide the reader toward a specific action, such as clicking a link or filling out a form. By including a clear CTA in your emails, you increase the likelihood that the reader will take the desired action.

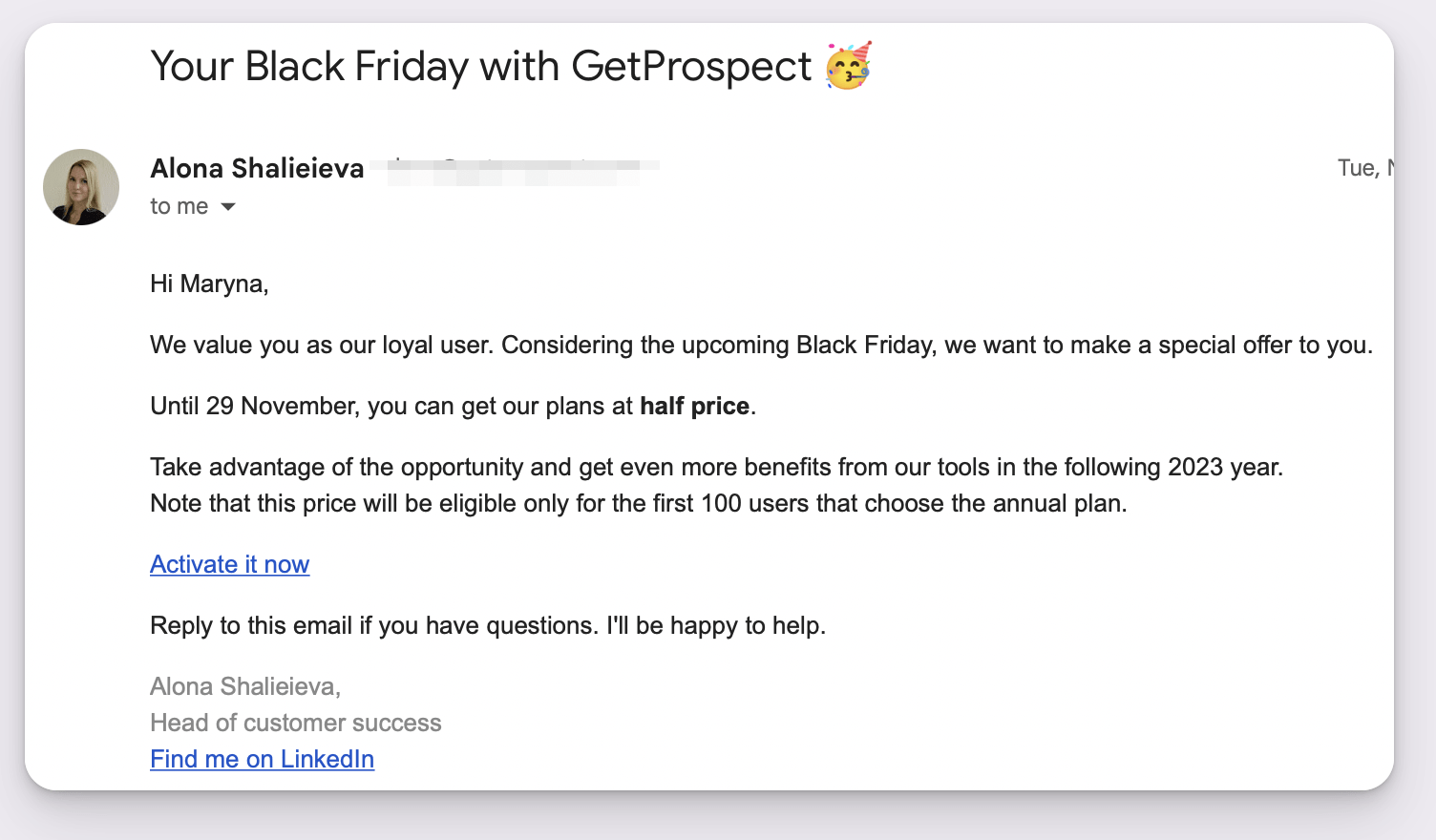

For example, our team at GetProspect uses CTAs in our email campaigns to drive click-throughs.

It's a good idea to test different versions of your CTAs to determine which ones are most effective.

2. Create A/B test emails

To create an A/B test, you need two templates of your email. We've prepared simple examples you can use as a starting point for your own.

A/B testing ideas for B2B mailings: from subject lines to timing

| Element | Version A | Version B |
|---|---|---|
| Subject line | Improve your efficiency with our new product | Boost your productivity with our latest solution |
| Personalization | Subject line: «Hi [First Name], check out our new feature» | Subject line: «Introducing our latest feature: [Feature Name]» |
| Call-to-action | Learn more | Request a demo |
| Layout and design | Single-column layout with a professional header image | Two-column layout with a more casual header image |
| Segmentation | Email sent to managers | Email sent to individual contributors |
| Timing | Email sent on Tuesday at 10 am | Email sent on Thursday at 2 pm |

Remember. The two versions of your A/B test email need different values for the element you are testing, while everything else stays the same.

3. Set conditions for calculating the best option

Once you have defined the element you will be testing (for instance, two versions of an email with different headlines), set the conditions for choosing the best option. These conditions can include deliverability, open rate, click rate, and the number of unsubscribes.
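If your email tool reports raw counts, the conditions above can be turned into rates and compared. The minimal Python sketch below uses hypothetical counts for each version and computes open, click, and unsubscribe rates per delivered email; that denominator is an assumption (some tools divide by emails sent, or compute clicks per open), so check how your service defines them. The `VariantStats` class and the `rates` and `pick_winner` functions are illustrative names, not part of any email platform's API.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Hypothetical raw counts reported for one email version."""
    name: str
    sent: int
    delivered: int
    opened: int
    clicked: int
    unsubscribed: int


def rates(v: VariantStats) -> dict:
    """Turn raw counts into the selection conditions (rates as fractions)."""
    return {
        "deliverability": v.delivered / v.sent,
        # Assumption: open, click, and unsubscribe rates are per delivered email.
        "open_rate": v.opened / v.delivered,
        "click_rate": v.clicked / v.delivered,
        "unsubscribe_rate": v.unsubscribed / v.delivered,
    }


def pick_winner(a: VariantStats, b: VariantStats, metric: str) -> str:
    """Return the name of the version that does better on the chosen metric."""
    ra, rb = rates(a)[metric], rates(b)[metric]
    if metric == "unsubscribe_rate":
        # Fewer unsubscribes is better; ties go to version A (the control).
        return a.name if ra <= rb else b.name
    # For every other metric, higher is better; ties again go to version A.
    return a.name if ra >= rb else b.name


a = VariantStats("A", sent=1000, delivered=980, opened=210, clicked=35, unsubscribed=4)
b = VariantStats("B", sent=1000, delivered=975, opened=250, clicked=41, unsubscribed=6)
print(pick_winner(a, b, "open_rate"))         # B
print(pick_winner(a, b, "unsubscribe_rate"))  # A
```

In this made-up example, version B wins on opens but loses on unsubscribes, which is why it helps to decide on the main condition before the test starts.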

4. Launch the email tests

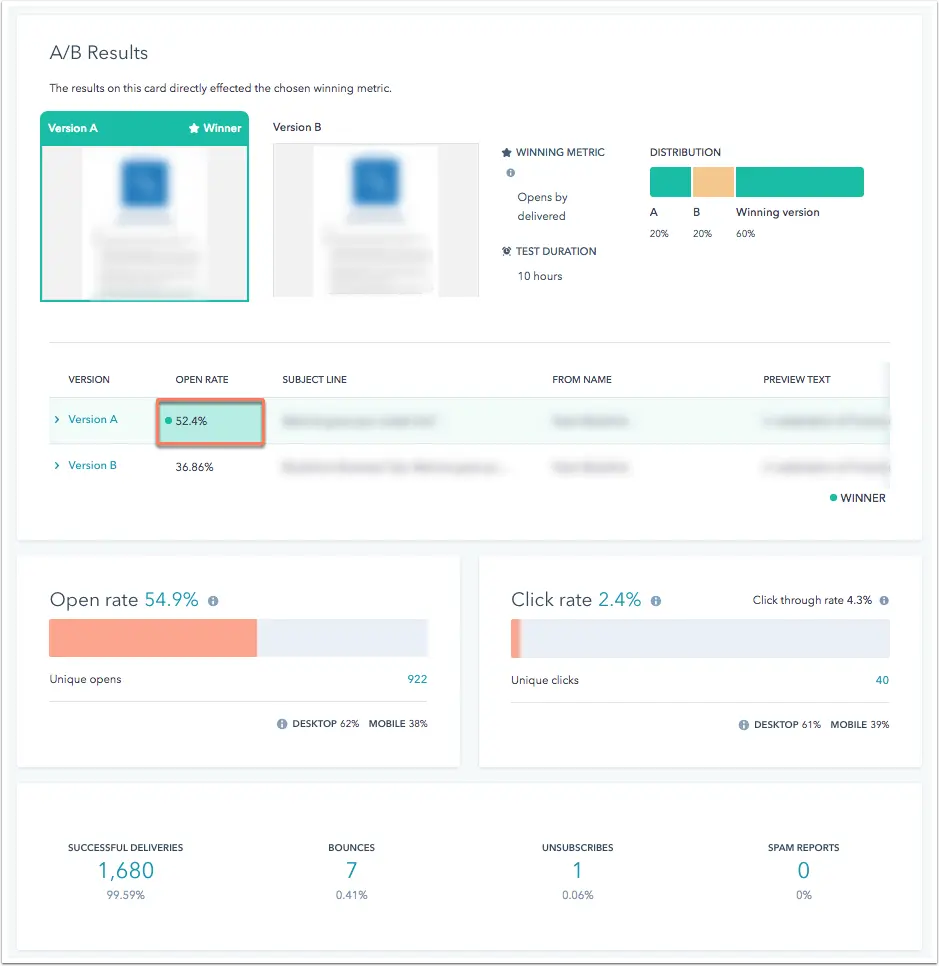

At this stage, select a share of your subscribers, for example 30-40% of the total list, and divide them equally between versions A and B. Then start the test.
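Here is a minimal Python sketch of this split, assuming your subscriber list is available as a plain list of addresses. The function `split_for_ab_test` and its parameters are hypothetical; most email platforms perform this split for you. Shuffling before slicing makes the groups random rather than dependent on list order (for example, sign-up date).

```python
import random


def split_for_ab_test(subscribers, test_fraction=0.3, seed=None):
    """Randomly draw a test sample and split it evenly into groups A and B.

    Returns (group_a, group_b, remainder); the remainder later gets the winner.
    """
    if not 0 < test_fraction <= 1:
        raise ValueError("test_fraction must be between 0 and 1")
    rng = random.Random(seed)       # a fixed seed makes the split reproducible
    shuffled = list(subscribers)    # copy so the caller's list is not reordered
    rng.shuffle(shuffled)           # random order removes bias from list order
    test_size = round(len(shuffled) * test_fraction)
    test_size -= test_size % 2      # keep groups A and B exactly the same size
    half = test_size // 2
    group_a = shuffled[:half]
    group_b = shuffled[half:test_size]
    remainder = shuffled[test_size:]
    return group_a, group_b, remainder


subscribers = [f"user{i}@example.com" for i in range(1000)]  # hypothetical list
group_a, group_b, remainder = split_for_ab_test(subscribers, test_fraction=0.3, seed=42)
print(len(group_a), len(group_b), len(remainder))  # 150 150 700
```

With 1,000 subscribers and `test_fraction=0.3`, each test group gets 150 addresses, and the remaining 700 wait for the winning version.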

5. Measure the engagement rates of the two groups

When the test is complete, compare the results and send the version with the best metrics to the remaining subscribers (60-70%).

How long to run email A/B test?

When conducting email campaign testing, it is essential to give the test enough time. How long that takes depends on several factors, such as the size of your email list and the expected response rate.

As a rule of thumb, running the test for at least a week helps ensure a sample large enough to draw meaningful conclusions. It may also help to run the test during a period of high email engagement, such as weekday business hours.

The key is to run the test long enough to gather reliable data, but not so long that you lose momentum or interest in the results.

How may the length of email tests vary based on different factors?

- If you have a small email list with a low expected response rate, you may need to run the test for longer to gather enough data to draw meaningful conclusions. In this case, running the test for two weeks or more may be helpful.

- If you have a large email list with a high expected response rate, you may be able to gather enough data in a shorter amount of time. In this case, running the test for a week or even a few days may be sufficient.

- If you run the test during a low email engagement period (such as over the weekend), you may also need to run it longer. In this case, running the test for at least a week may be necessary.

- If you are running email tests during a high email engagement period (such as during business hours on weekdays), you may be able to gather enough data much more quickly. In this case, running the test for a few days or even a single day may be satisfactory.
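To turn "enough data" into a number, you can estimate how many recipients each version needs before starting. The Python sketch below uses the standard sample-size approximation for comparing two proportions with a two-sided z-test; the 5% significance level, 80% power, and the baseline and expected rates are assumptions you would replace with your own. The helper `days_needed` is hypothetical and assumes you can send a fixed number of emails per version per day, as in a triggered email program.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control, p_variant, alpha=0.05, power=0.8):
    """Approximate recipients needed in EACH group to detect p_control vs p_variant
    with a two-sided two-proportion z-test (normal approximation)."""
    if p_control == p_variant:
        raise ValueError("expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / (p_control - p_variant) ** 2)


def days_needed(n_per_variant, sends_per_day_per_variant):
    """Days the test must run if each version reaches a fixed number of people per day."""
    return math.ceil(n_per_variant / sends_per_day_per_variant)


n = sample_size_per_variant(0.20, 0.25)  # hypothetical: 20% baseline, hoping for 25%
print(n, days_needed(n, sends_per_day_per_variant=200))
```

For a 20% baseline open rate and a hoped-for 25%, this comes out at roughly 1,100 recipients per version. Smaller expected differences need much larger samples, which is why small lists with low response rates need longer tests.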

What mistakes should not be made while A/B testing your emails?

- Not setting clear goals for your test: Before you start, you must know exactly what you want to achieve. This will help you decide which elements of your email to test and how to measure the test's success.

- Testing too many variables at once: When you test multiple variables simultaneously, it can be difficult to determine which element is responsible for changes in performance. It's better to test one variable at a time so you can clearly see the impact of that specific change.

- Not having a large enough sample size: A small sample size can lead to inaccurate results, so it's important to test your emails on a large enough group of recipients.

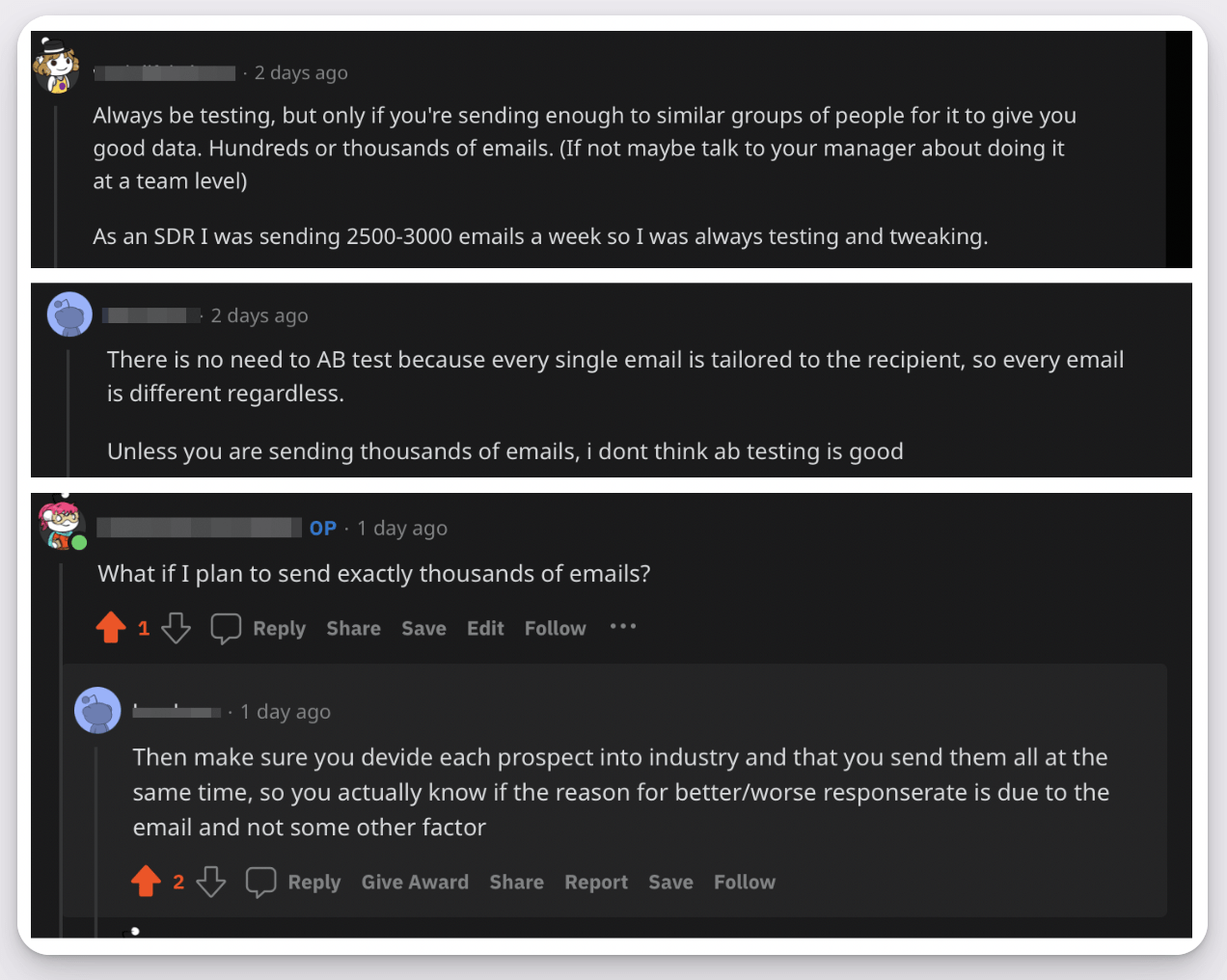

We asked members of the Sales group on Reddit (217k members) about the critical points of email A/B testing, and several of them highlighted this one. So make sure your test mailings reach a large number of leads or clients.

- Not testing long enough: A/B tests should run long enough to produce meaningful results. This will depend on the size of your email list and the nature of your emails, but it's generally recommended to test for at least a week or two.

- Not analyzing the results properly: It's important to thoroughly analyze the results of your email A/B tests and not just rely on superficial metrics like open rate. Look at the data in context and consider the overall performance of your email campaign.

- Not following up on the results: It's not enough to just run an A/B test and then forget about it. Use what you've learned to improve your email campaigns, and then keep testing to see whether those changes have a positive impact.

Eight email A/B testing best practices

- Test one variable at a time

- Use a control version for comparison

- Start A/B testing your emails in parallel with each other

- Check for statistical significance in your email A/B tests

- Stay ahead of the competition through constant A/B testing of your emails

- Define your audience & use a random sample

- Use a consistent testing schedule

- Analyze your A/B test email results

Test one variable at a time

By testing only one variable at a time, you can accurately determine the impact that variable has on your email's performance.

For instance, if you want to increase clicks in your email, you might be tempted to test multiple variables at once, such as the design of your call-to-action button and the images in your email body. However, if both variables change at the same time, it's difficult to determine which one caused the increase in clicks.

When you prepare an A/B test for your email campaign, create two versions of your email: one with the variable you want to test and one without it. This allows you to compare the performance of each version and determine the impact of that individual variable.

Use a control version for comparison

Using a control version when you are A/B testing your emails is an important best practice because it provides a reliable baseline for comparison.

The control version is the original email that you would have sent without conducting any testing. By using it, you can compare the performance of your test versions to a known baseline, which helps to reduce the impact of confounding variables.

Confounding variables are factors outside your control that can affect your test's validity. For example, if some of your recipients are on vacation without Internet access during your email A/B test, this could affect the results.

Using a control version helps you account for as many confounding variables as possible, making your results more accurate and reliable. It also gives you a stable baseline, which makes it easier to see the lift (improvement) that the test version has achieved.
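Lift is usually reported as a relative change from the control, which is easy to confuse with the absolute difference in percentage points. This small Python sketch computes both from two rates expressed as fractions; the function names and the example rates are illustrative only.

```python
def relative_lift(control_rate: float, test_rate: float) -> float:
    """Relative improvement of the test version over the control (0.25 means +25%)."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (test_rate - control_rate) / control_rate


def absolute_difference_pp(control_rate: float, test_rate: float) -> float:
    """Absolute difference between the two rates, in percentage points."""
    return (test_rate - control_rate) * 100


# Example: the control is opened by 20% of recipients, the test version by 25%.
print(f"lift: {relative_lift(0.20, 0.25):.0%}")                    # lift: 25%
print(f"difference: {absolute_difference_pp(0.20, 0.25):.1f} pp")  # difference: 5.0 pp
```

The same arithmetic applies to claims like "26% more likely to be opened": that is a relative lift, so on a hypothetical 20% baseline open rate it would mean about 25.2%, not 46%.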

Start A/B testing your emails in parallel with each other

Testing simultaneously is a best practice when A/B testing your email campaigns because it helps to account for changes in customer behavior and product offerings.

Throughout the year, retailers often experience seasonal highs and lows, and customer behavior changes with them. Changes to your product catalog can also affect your email marketing results. By running your test versions in parallel, you expose them to the same conditions, so these shifts affect both versions equally and your results stay accurate and reliable.

Testing simultaneously also helps reduce the impact of confounding variables, because all versions are sent at the same time rather than one after another. This gives a more accurate picture of how effective your test variables are.

Check for statistical significance in your email A/B tests

Checking for statistical significance when you A/B test email campaigns is essential because it helps ensure that the results you see are meaningful and not just due to random chance.

To determine statistical significance, you can calculate the p-value: the probability of seeing a difference at least as large as the one you observed if the two versions actually performed the same. Generally, a p-value of 5% (0.05) or lower is considered statistically significant, meaning the observed difference is unlikely to be due to chance alone.

Note. Reaching a p-value of 5% or lower may take a few weeks, depending on how many emails your triggered email program sends, because you need a sufficient sample size to determine statistical significance accurately.
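For comparing open or click rates between two versions, a common choice is the two-proportion z-test. The Python sketch below computes its two-sided p-value using only the standard library; the function name and the counts in the example are made up, and for very small groups an exact test (such as Fisher's exact test) would be more appropriate than this normal approximation.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(successes_a, n_a, successes_b, n_b):
    """Two-sided p-value for the null hypothesis that both versions
    have the same underlying rate (pooled two-proportion z-test)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)  # rate if there is no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # both groups at 0% or 100%: no evidence of a difference
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: 200 of 1,000 recipients opened A, 250 of 1,000 opened B.
p = two_proportion_p_value(200, 1000, 250, 1000)
print(f"p-value: {p:.4f}")
```

With these made-up numbers the p-value is about 0.007, below the usual 0.05 threshold; with 200 versus 210 opens out of 1,000 each, it would be far above it.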

Stay ahead of the competition through constant A/B testing of your emails

Continuously challenging yourself with new tests is an important best practice when A/B testing your email campaigns, because it allows you to keep optimizing your efforts and stay up to date with changing trends and audience behaviors.

Many aspects of an email can be tested for optimization, such as the subject line, layout, use of images, and call-to-action.

By constantly coming up with new variables to A/B test in your emails, you can keep improving their effectiveness and stay ahead of the competition.

For example, you can test different subject line lengths, tones, and levels of urgency to see which ones drive the most opens and clicks. You can also test different layout options, such as the placement of images and buttons, to see which ones are most effective at driving conversions.

Define your audience & use a random sample

Defining your audience for an email A/B test helps you target the right people with the right message.

Behavioral data can be especially valuable when it comes to choosing the right target audience for your tests. By analyzing your customers' behaviors, you can create segments based on factors such as purchase history, engagement levels, and geographic location. This allows you to launch more targeted campaigns that are more likely to be successful.

Once you've defined your audience, split it randomly into test groups. This gives you a representative sample of your email list, which helps provide accurate and reliable results.

Use a consistent testing schedule

Establish a regular testing schedule to keep your email marketing efforts fresh and up-to-date.

Trends and audience behaviors are constantly changing, so it's important to keep analyzing and adjusting your strategy. By regularly testing different variables, you can stay ahead of the curve and ensure that your emails perform at their best.

Note. The frequency of your email A/B testing schedule will depend on your resources and the size of your email list. You'll need to find a balance that allows you to conduct enough tests to make meaningful improvements while leaving enough time to analyze the results and implement any necessary changes.

Analyze your A/B test email results

Analyze and learn from your results. As mentioned earlier, after running A/B tests on your emails, it's important to carefully analyze the results and look for trends and insights that can inform your future email marketing efforts.

This can help you identify improvement areas and make necessary adjustments to optimize your emails for success. It's also necessary to consider the context in which your tests are conducted.

For example, if you're testing a new subject line and see a significant rise in opens, it's crucial to consider what other factors may have contributed to this result.

Was there a particularly newsworthy event that may have drawn more attention to your emails? Did you send the test email at a time when your audience was more likely to be engaged?

So, by following these email A/B testing best practices and continuously analyzing and learning from your results, you can make informed decisions about your email marketing strategy and improve the performance of your emails.

Summary about email campaign testing

- Email A/B testing is a way to compare two versions of an email marketing campaign to determine which performs better.

- You can use A/B email testing to improve the performance of your email marketing campaigns, leading to higher engagement and conversion rates and, ultimately, a higher return on investment.

- The five steps of an email A/B test: identify the element you want to test; create two versions of the email; set conditions for calculating the best option; launch the test; measure the engagement rates of the two groups.

- When conducting email campaign testing, it is essential to run it for a sufficient amount of time to gather reliable data.

- Mistakes that you shouldn’t make: not setting clear goals for your test; testing too many variables at once; not having a large enough sample size.

- Top email A/B testing best practices: use a control version for comparison, start testing emails in parallel with each other, check for statistical significance, stay ahead of the competition through constant A/B testing of your emails, define your audience & use a random sample, etc.